Upgrading MongoDB using Argo Workflows

At Close, we’re strong advocates for infrastructure automation. Our journey began two years ago when we automated our MongoDB deployment using Ansible, and we've continued to evolve ever since. While those Ansible playbooks were a big step forward, they still required some manual work, like performing safety and performance checks. Our processes were more time-consuming than they needed to be, with lengthy checklists for each routine task.

We have a lot of data, and a MongoDB footprint to match. Despite its size, it is still a typical MongoDB deployment, so we end up performing the same tasks across a large number of shards. That kind of repetitive work can be automated in an orchestrated way with a workflow engine, where we define the steps and the dependencies among them. On top of that, we found a tool that is simple and easy to use, yet flexible enough for other use cases.

Argo Workflows became our tool of choice. It enabled us to leverage our existing efforts without starting from scratch. As a small team, avoiding the need to reinvent the wheel is crucial. Argo Workflows is Kubernetes-native, uses YAML for configuration, supports the features we need, and allows us to run any task within a container. This flexibility is especially valuable for integrating CLI commands from various tools in our stack.

We use Tanka, which is built on Jsonnet, to manage our configurations. Since Argo Workflows resources are plain YAML manifests, we can take advantage of the Argo Workflows Jsonnet library to generate the necessary YAML and execute our workflows seamlessly.

The Basics of Argo Workflows

Argo Workflows is a container-native workflow engine for orchestrating parallel jobs on Kubernetes. It relies on Kubernetes Custom Resource Definitions (CRDs) to define workflows, the main ones being Workflow and WorkflowTemplate. These enable us to reuse functionality using templates with parameters and other resources.

Workflows are built from templates, which are not to be confused with WorkflowTemplates. Templates come in two types: Template Definitions (such as scripts, containers, and Kubernetes resources), which describe the work to be done, and Template Invocators, namely Steps and DAGs (Directed Acyclic Graphs), which call other templates and control the order in which they run. Steps are a list of step groups: the groups run sequentially, while the steps inside a group run in parallel without any dependencies among them. A DAG instead declares each task's dependencies explicitly, and tasks run as soon as their dependencies are satisfied. If you need to manage dependencies between tasks, use a DAG.
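Container templates are the most common Template Definition. For contrast, here is a minimal sketch of a `script` template: Argo writes the `source` field to a file inside the container, runs it with the interpreter named in `command`, and exposes the script's standard output as `outputs.result`. The template name `is-writable-primary`, the `mongo_host` parameter, and the `mongo:7.0` image are illustrative assumptions, not part of our workflow.

```yaml
# Hypothetical script template: the name, parameter, and image are illustrative.
- name: is-writable-primary
  inputs:
    parameters:
    - name: mongo_host          # e.g. one replica set member as host:port
  script:
    image: mongo:7.0            # recent official mongo images ship with mongosh
    command: [bash]
    source: |
      set -eo pipefail
      # Prints "true" or "false"; Argo captures stdout as outputs.result
      mongosh --quiet --host "{{inputs.parameters.mongo_host}}" \
        --eval 'db.hello().isWritablePrimary'
```

A later step could read the value as `{{steps.<step-name>.outputs.result}}`, for example in a `when` condition.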

Example of a Basic Workflow

Here’s an example of a simple Workflow definition:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: hello-world-
spec:
  entrypoint: hello-world
  templates:
  - name: hello-world
    container:
      image: busybox
      command: [echo]
      args: ['hello world']
```

In a Steps template, tasks are defined as a series of steps that can be executed sequentially or in parallel:

```yaml
- name: three-steps
  steps:
  - - name: step1
      template: prepare-data
  - - name: step2a
      template: run-data-first-half
    - name: step2b # single dash: same inner list as step2a, so step2a and step2b run in parallel
      template: run-data-second-half
```

A DAG template allows tasks to be defined as a graph with dependencies:

```yaml
- name: diamond
  dag:
    tasks:
    - name: A
      template: echo
    - name: B
      dependencies: [A]
      template: echo
    - name: C
      dependencies: [A]
      template: echo
    - name: D
      dependencies: [B, C]
      template: echo
```

For our use case, Steps work best since most tasks are sequential.
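Steps also combine well with loops, which helps when the same task has to run on many shards. A minimal sketch, assuming a hypothetical `upgrade-shard` template and made-up shard names: `withItems` expands into one step per shard, and `parallelism: 1` on the enclosing template allows only one of those children to run at a time, so shards are handled one by one instead of all at once.

```yaml
# Sketch: run the same (hypothetical) template once per shard, one shard at a time.
- name: upgrade-all-shards
  parallelism: 1                  # at most one child of this template runs at a time
  steps:
  - - name: upgrade-shard
      template: upgrade-shard     # hypothetical template taking a "shard" parameter
      arguments:
        parameters:
        - name: shard
          value: '{{item}}'
      withItems: [shard-01, shard-02, shard-03]   # illustrative shard names
```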

Our Workflow in Action

Our current Workflow definition includes around 90 steps that automate MongoDB maintenance upgrades in our clusters, with no human intervention. Reliability and maintainability were our top priorities; speed was secondary. Given the critical nature of databases, we can’t afford data loss or service disruptions.

We adhered to a few principles while developing this workflow:

- Simplicity: Anyone on the team should quickly understand the workflow’s function and be able to make adjustments. It also needs to be easy to maintain and extend.

- Error Handling: Rather than writing handling logic for every possible failure, we focus on validating outputs. If a step still fails after its retries, the workflow halts and sends a Slack notification.

- Metric-Based Validation: Throughout the upgrade process, we monitor specific metrics to ensure that the expected outcomes are met before proceeding.
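Argo Workflows supports the error-handling principle natively: a `retryStrategy` retries a step before giving up, and an `onExit` handler runs once the workflow finishes, whatever the outcome. The sketch below is illustrative rather than our exact definition; the `curlimages/curl` image and the `slack_webhook_url` parameter are assumptions, and the `when` condition makes the handler post only when the workflow did not succeed.

```yaml
# Sketch: retries on a step plus a Slack notification when the workflow fails.
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: retry-and-notify-
spec:
  entrypoint: main
  onExit: exit-handler            # runs after the entrypoint finishes, success or not
  arguments:
    parameters:
    - name: slack_webhook_url     # hypothetical: a Slack incoming-webhook URL
  templates:
  - name: main
    retryStrategy:
      limit: "3"                  # up to 3 retries after the first attempt
      retryPolicy: Always         # retry on errors as well as failures
      backoff:
        duration: "30s"
        factor: "2"               # wait 30s, then 60s, then 120s
    container:
      image: busybox
      command: [sh, -c, 'exit 1'] # stand-in for a real task; always fails here
  - name: exit-handler
    steps:
    - - name: notify-slack
        template: slack
        when: "{{workflow.status}} != Succeeded"
  - name: slack
    container:
      image: curlimages/curl
      command: [curl]
      args:
      - -sS
      - -X
      - POST
      - -H
      - 'Content-Type: application/json'
      - -d
      - '{"text": "Workflow {{workflow.name}} finished with status {{workflow.status}}"}'
      - '{{workflow.parameters.slack_webhook_url}}'
```

Submitted with `argo submit <file> -p slack_webhook_url=<webhook URL>`, the `main` step is attempted four times in total, after which the workflow fails and the exit handler posts the status to Slack.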

To aid with validation, we developed Python scripts that perform checks after each database operation.
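Our actual validation scripts are not shown here, so the following is a hypothetical `script` template in the same spirit. It asks Prometheus's HTTP API (`/api/v1/query`) for an instant value and exits non-zero when that value exceeds a threshold. The `query` and `threshold` parameters are assumptions; `prometheus_url` mirrors the input our Workflow already takes. Combined with a `retryStrategy`, the check becomes a bounded wait: the step keeps retrying until the metric settles or the retries run out, at which point the workflow halts.

```yaml
# Hypothetical validation template: fails unless a Prometheus query result is <= threshold.
- name: check-metric
  inputs:
    parameters:
    - name: prometheus_url        # e.g. http://prometheus:9090, reachable from the pod
    - name: query                 # PromQL expression returning an instant vector
    - name: threshold
  retryStrategy:
    limit: "10"
    retryPolicy: Always
    backoff:
      duration: "30s"
  script:
    image: python:3.12-slim
    command: [python]
    env:                          # passed via env to avoid quoting issues in the source
    - name: PROM_URL
      value: '{{inputs.parameters.prometheus_url}}'
    - name: PROM_QUERY
      value: '{{inputs.parameters.query}}'
    - name: THRESHOLD
      value: '{{inputs.parameters.threshold}}'
    source: |
      import json
      import os
      import sys
      import urllib.parse
      import urllib.request

      url = os.environ["PROM_URL"].rstrip("/") + "/api/v1/query?" + urllib.parse.urlencode(
          {"query": os.environ["PROM_QUERY"]}
      )
      with urllib.request.urlopen(url, timeout=10) as resp:
          body = json.load(resp)

      # Instant-vector samples look like {"metric": {...}, "value": [timestamp, "value"]}
      samples = body["data"]["result"]
      if not samples:
          sys.exit("query returned no samples")
      value = float(samples[0]["value"][1])
      threshold = float(os.environ["THRESHOLD"])
      print(f"value={value} threshold={threshold}")
      # A non-zero exit fails the step, which triggers the retryStrategy above
      sys.exit(0 if value <= threshold else 1)
```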

The workflow orchestrates a variety of tools, including Ansible, Terraform, Vault, and Git, to carry out MongoDB maintenance. By using WorkflowTemplate with parameters, we reuse a significant amount of YAML and invoke templates with varying parameters for different tasks.

Here’s a snippet of our WorkflowTemplate for running an Ansible playbook:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: WorkflowTemplate
metadata:
  name: mongodb-ansible-wftmpl
spec:
  templates:
  - name: ansible-playbook
    inputs:
      parameters:
      - name: working_dir
      - name: service_level
      - name: ansible_playbook
      - name: ansible_extra_vars
      - name: ansible_user
      - name: ansible_tags
      - name: vault_addr
    container:
      image: 'close-infra-tools:latest'
      command: ['/bin/bash', '-c']
      # Container args must be a list; the whole script is a single argument to bash -c
      args:
      - |
        set -eo pipefail
        # Unquoted on purpose so that a leading "~" in working_dir expands to $HOME
        cd {{ inputs.parameters.working_dir }}
        # ansible_tags is left unquoted so it can be empty or contain several words
        ansible-playbook \
          --inventory "inventories/{{ inputs.parameters.service_level }}" \
          --extra-vars "{{ inputs.parameters.ansible_extra_vars }}" \
          --user "{{ inputs.parameters.ansible_user }}" \
          {{ inputs.parameters.ansible_tags }} \
          "{{ inputs.parameters.ansible_playbook }}"
```

In the Workflow, we reference the previous template with all parameters defined:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: mongodb-upgrade-wf-
spec:
  entrypoint: mongodb-upgrade
  templates:
  - name: mongodb-upgrade
    inputs:
      parameters:
      - name: service_level
      - name: ansible_user
      - name: ansible_tags
      - name: artifact_s3_workflow_path
      - name: vault_addr
      - name: alert_manager_url
      - name: prometheus_url
      - name: step_by_step
    steps:
    - - name: run-pre-upgrade
        templateRef:
          name: mongodb-ansible-wftmpl
          template: ansible-playbook
        arguments:
          parameters:
          - name: vault_addr
            value: '{{ inputs.parameters.vault_addr }}'
          - name: working_dir
            value: '~/ansible'
          - name: service_level
            value: '{{ inputs.parameters.service_level }}'
          - name: ansible_playbook
            value: 'plays/mongodb_operations/pre-upgrade.yml'
          - name: ansible_extra_vars
            value: "{'target': 'mongodb-instances'}"
          - name: ansible_user
            value: '{{ inputs.parameters.ansible_user }}'
          - name: ansible_tags
            value: '{{ inputs.parameters.ansible_tags }}'
```

We do the same for each tool the workflow needs to run. Terraform requires a few extra actions: first we generate the Terraform plan and store the resulting plan file in S3; then, in the apply phase, we use Argo Workflows artifacts to pull that plan from S3 and feed it to the apply command.
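The plan/apply hand-off maps onto Argo's output and input artifacts. The sketch below is a simplified, hypothetical version, not our actual template: it reuses the `close-infra-tools` image from the Ansible example, the `~/terraform` directory is an assumption, and it presumes the cluster's default artifact repository is already configured for S3. The plan step saves `/tmp/tfplan`, Argo uploads it as an output artifact, and the apply step receives it through `from:` and finds it at the same path before its container starts.

```yaml
# Sketch: hand a Terraform plan from one step to the next via the artifact repository.
- name: terraform-plan-apply
  steps:
  - - name: plan
      template: terraform-plan
  - - name: apply
      template: terraform-apply
      arguments:
        artifacts:
        - name: tfplan
          from: '{{steps.plan.outputs.artifacts.tfplan}}'

- name: terraform-plan
  container:
    image: 'close-infra-tools:latest'
    command: ['/bin/bash', '-c']
    args:
    - |
      set -eo pipefail
      cd ~/terraform              # hypothetical working directory
      terraform init -input=false
      terraform plan -input=false -out=/tmp/tfplan
  outputs:
    artifacts:
    - name: tfplan
      path: /tmp/tfplan           # uploaded to the artifact repository when the step ends

- name: terraform-apply
  inputs:
    artifacts:
    - name: tfplan
      path: /tmp/tfplan           # downloaded from the artifact repository before the step runs
  container:
    image: 'close-infra-tools:latest'
    command: ['/bin/bash', '-c']
    args:
    - |
      set -eo pipefail
      cd ~/terraform
      terraform init -input=false
      # Applying a saved plan file runs without an interactive approval prompt
      terraform apply -input=false /tmp/tfplan
```

Keeping plan and apply as separate steps means the apply phase executes exactly the plan that was produced, rather than recomputing it.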

Workflow Execution

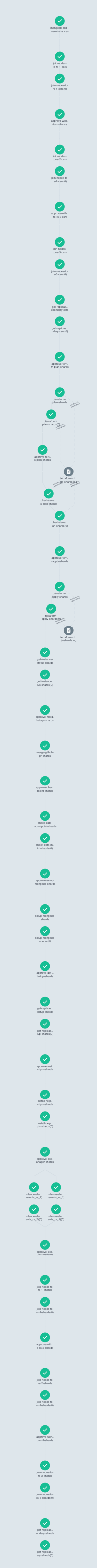

Our workflow consists of several steps, each performing specific operations. It includes retries for transient failures, stores and pulls artifacts in S3 and sends notifications when the workflow fails.

Below is a screenshot showing a partial execution of our MongoDB cluster upgrade workflow:

Conclusion

Automating MongoDB maintenance operations using Argo Workflows has significantly streamlined our processes at Close. By leveraging reusable templates, defining clear dependencies, and integrating with various tools like Ansible and Terraform, we've created a robust and flexible system for managing complex tasks. This automation not only reduces human intervention but also minimizes the risk of errors during critical database upgrades.

As we continue to refine and expand our workflows, we aim to automate even more processes across our infrastructure, allowing us to operate more efficiently while focusing on innovation. Ultimately, automation is a journey, and we look forward to further simplifying our operations while ensuring reliability and scalability.

What’s Next?

We'll keep refining our workflow and expanding Argo Workflows' usage across the team to automate even more processes. And once we automate everything, maybe we’ll finally have some extra free time, right?